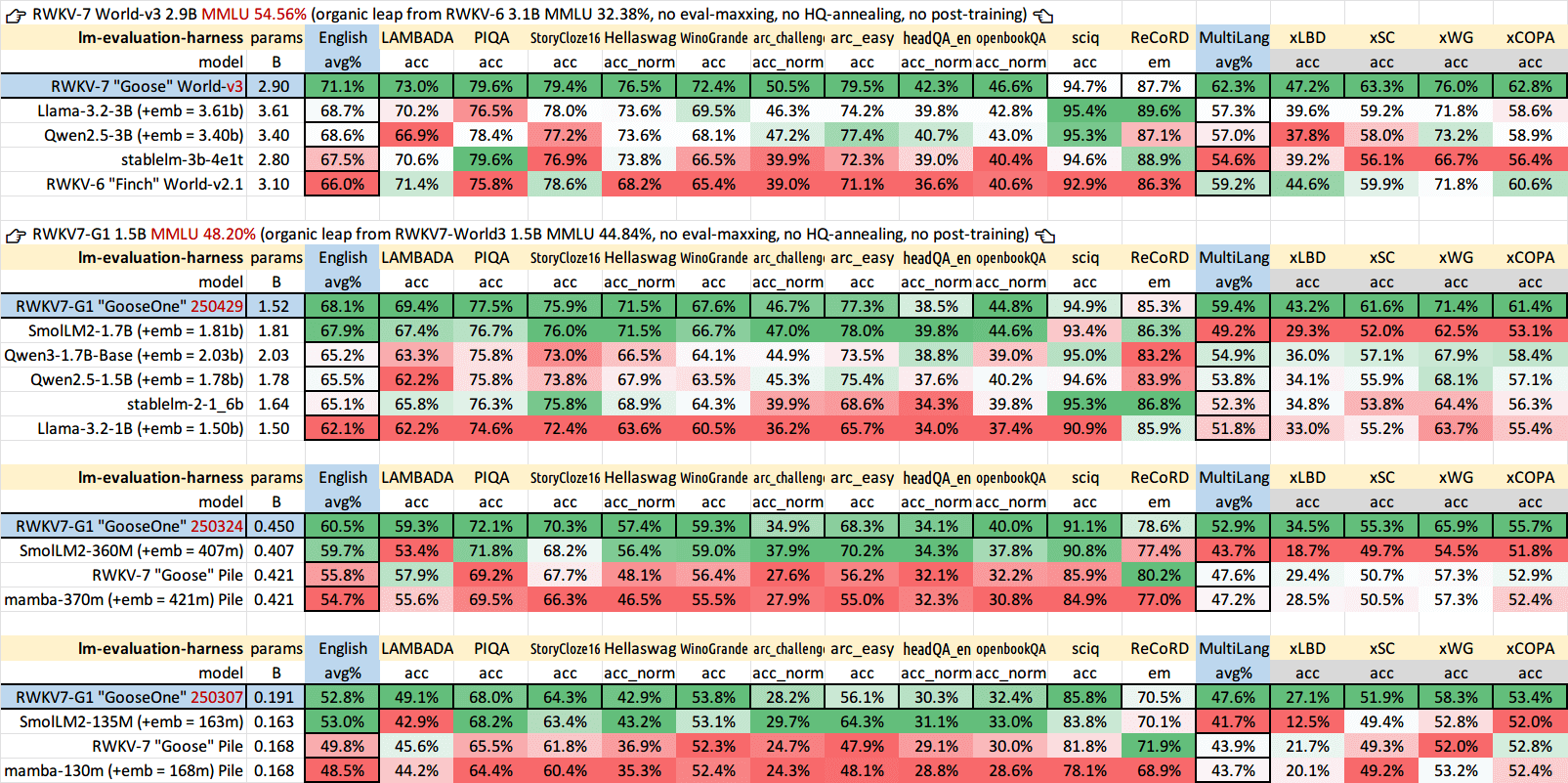

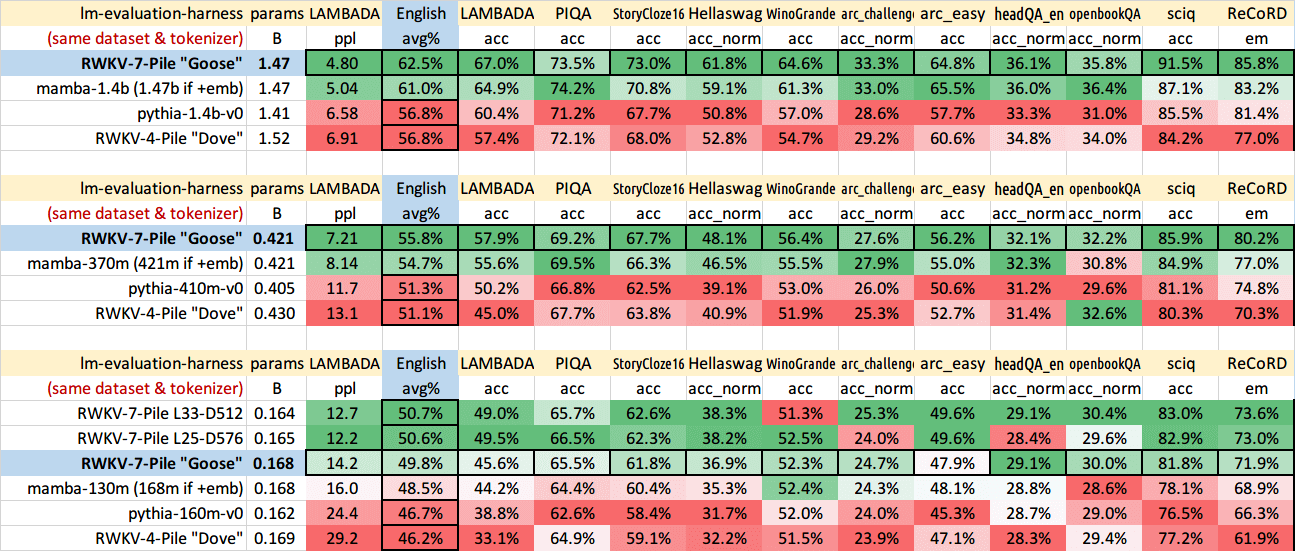

RWKV (pronounced RwaKuv) is an RNN with great LLM performance that is parallelizable like a Transformer. Check out the RWKV-7 "Goose" reasoning models.

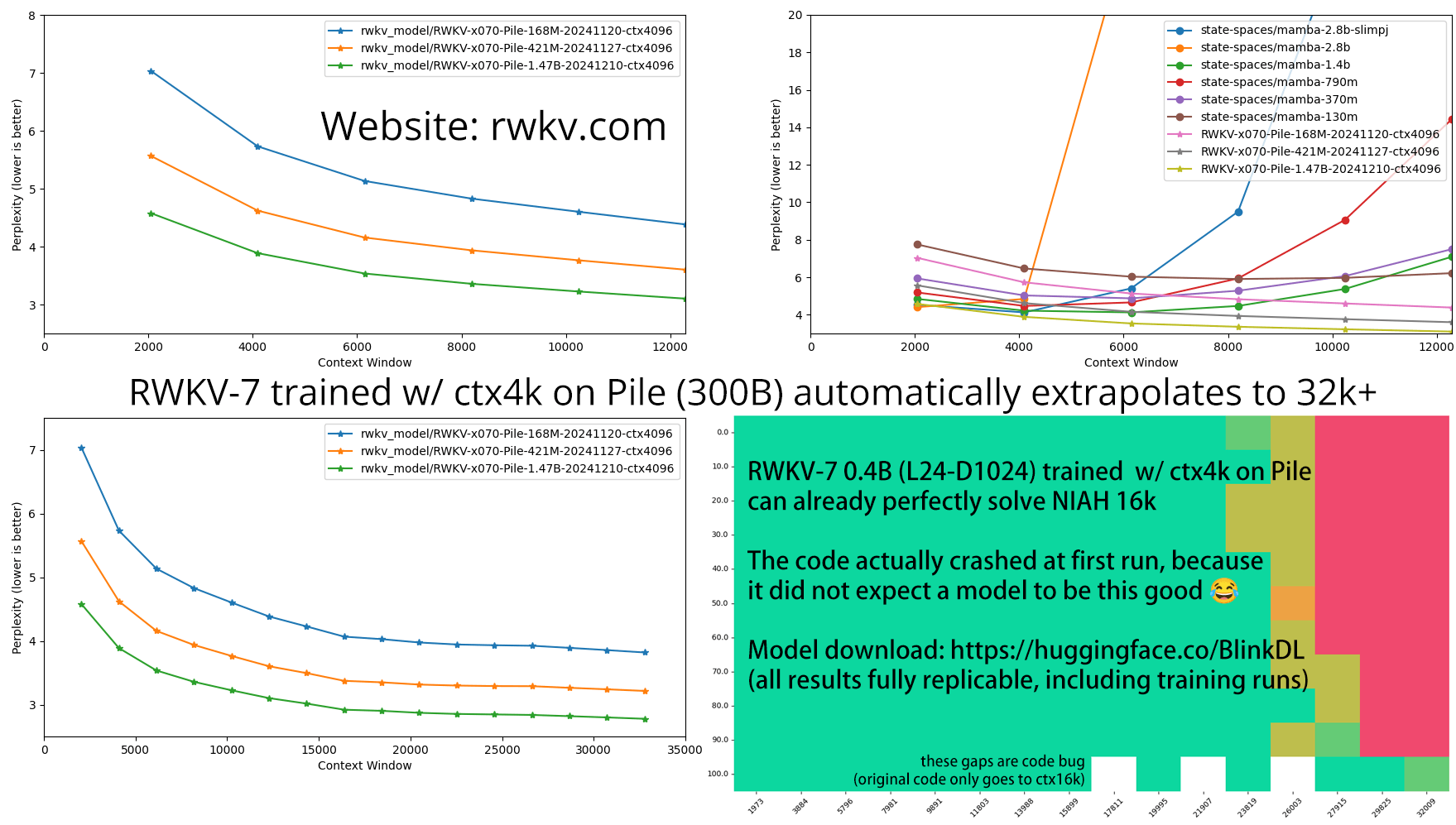

It combines the best of RNNs and Transformers: great performance, linear time, constant space (no KV cache), fast training, infinite ctxlen, and free text embedding. It is also 100% attention-free and a Linux Foundation AI project.
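To make "linear time, constant space" and "parallelizable" concrete, here is a minimal sketch of a generic decayed linear recurrence. It is an illustration only, not RWKV's actual state update, which is more elaborate. All names (`recurrent_step`, `run_recurrent`, `run_parallel`, `demo`) and the single scalar `decay` are assumptions made for this example. The recurrent form carries a fixed d×d state from token to token, while the parallel form computes each position directly from the inputs, and both give the same outputs.

```python
import random

def recurrent_step(state, r, k, v, decay):
    """One token: state <- decay * state + outer(k, v); output = r @ state."""
    d = len(k)
    new_state = [[decay * state[i][j] + k[i] * v[j] for j in range(d)]
                 for i in range(d)]
    out = [sum(r[i] * new_state[i][j] for i in range(d)) for j in range(d)]
    return new_state, out

def run_recurrent(rs, ks, vs, decay):
    """RNN mode: one pass over the sequence, carrying only a d x d state."""
    d = len(ks[0])
    state = [[0.0] * d for _ in range(d)]
    outs = []
    for r, k, v in zip(rs, ks, vs):
        state, out = recurrent_step(state, r, k, v, decay)
        outs.append(out)
    return outs, state

def run_parallel(rs, ks, vs, decay):
    """Same outputs without a carried state: each position t depends only on
    the inputs up to t, so positions could be evaluated independently."""
    d = len(ks[0])
    outs = []
    for t in range(len(rs)):
        out = [0.0] * d
        for i in range(t + 1):
            dot = sum(a * b for a, b in zip(rs[t], ks[i]))
            weight = decay ** (t - i) * dot
            for j in range(d):
                out[j] += weight * vs[i][j]
        outs.append(out)
    return outs

def demo(seq_len, d=4, decay=0.9, seed=0):
    """Compare both forms on random inputs; return (max difference, state size)."""
    rng = random.Random(seed)
    def rand_seq():
        return [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(seq_len)]
    rs, ks, vs = rand_seq(), rand_seq(), rand_seq()
    outs_rnn, state = run_recurrent(rs, ks, vs, decay)
    outs_par = run_parallel(rs, ks, vs, decay)
    max_diff = max(abs(a - b)
                   for x, y in zip(outs_rnn, outs_par)
                   for a, b in zip(x, y))
    state_size = sum(len(row) for row in state)
    return max_diff, state_size

if __name__ == "__main__":
    for n in (8, 64):
        diff, size = demo(n)
        print(f"seq_len={n}: max difference {diff:.2e}, state holds {size} numbers")
```

In this toy version the state holds the same number of values whether the sequence has 8 tokens or 64, which is the sense in which no KV cache is needed at inference time.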

RWKV-Projects

RWKV-LM

Training RWKV (and latest developments)

RWKV App

Run RWKV 0.1B to 7B models locally on your phone (Android, iOS)

Albatross

Very efficient inference (7B fp16 at batch size 960 = 10250+ tokens/s on an RTX 5090)

RWKV-Runner

RWKV GUI with API (PC)

RWKV pip package

Reference RWKV pip package (slower)

RWKV-PEFT

Fine-tuning RWKV (9GB of VRAM is enough to fine-tune a 7B model)

RWKV-server

WebGPU inference (NVIDIA/AMD/Intel), nf4/int8/fp16

More... (600+ RWKV projects)

Misc

RWKV weights

All latest RWKV weights

RWKV Ollama weights

Ollama GGUF RWKV weights

RWKV-related papers

RWKV wiki

with history of RWKV from v1 to v7 (note: AI-written)

RWKV-Papers

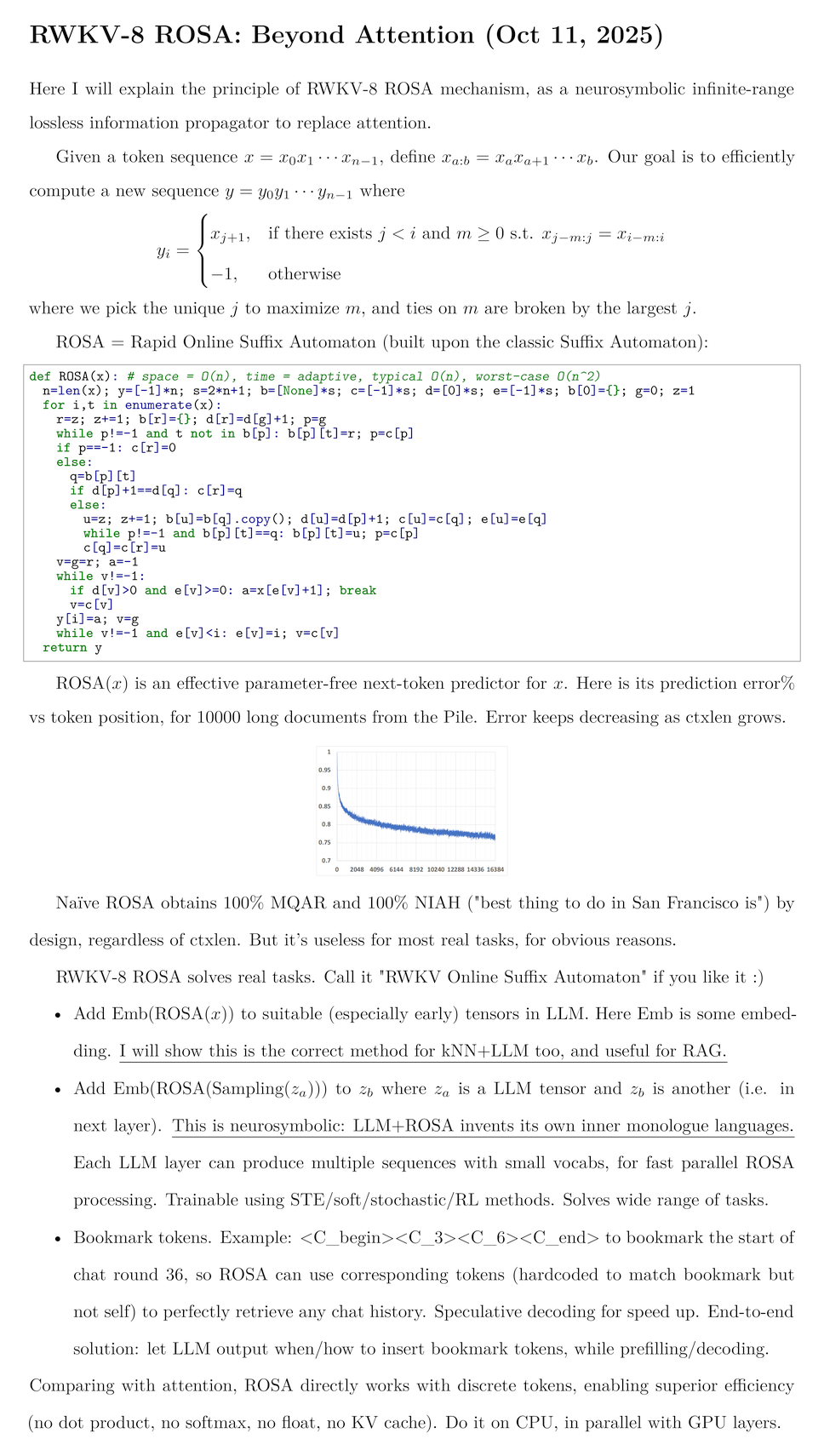

RWKV-8 explained

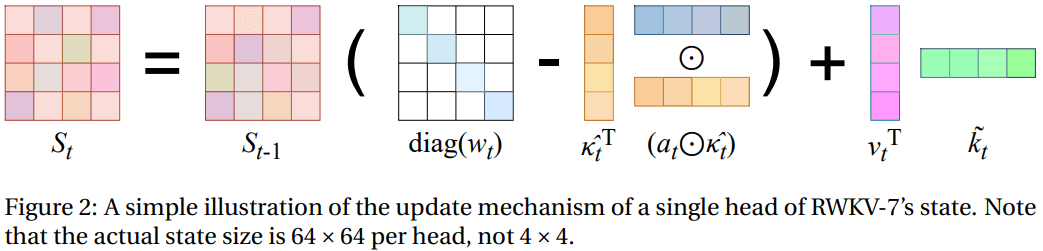

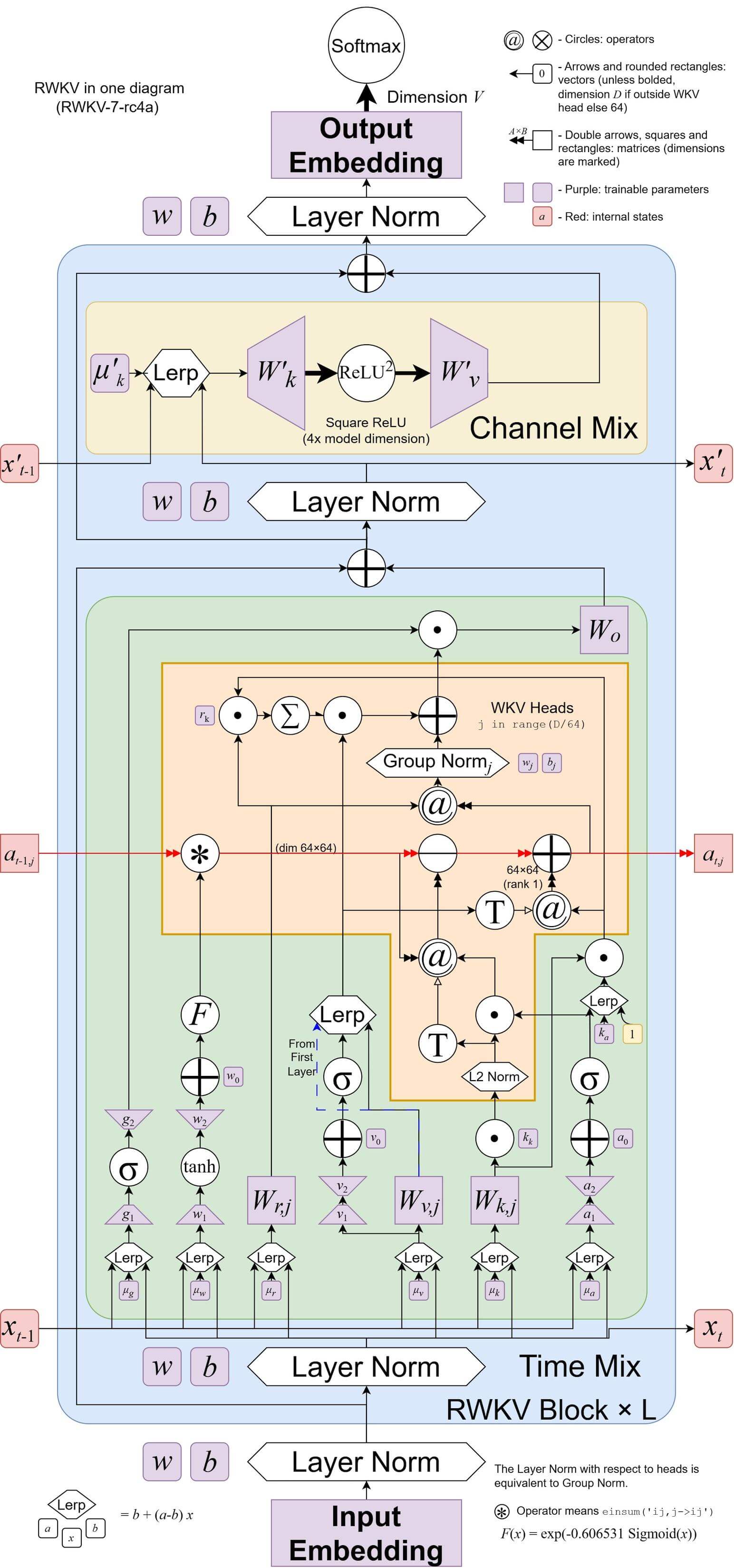

RWKV-7 explained

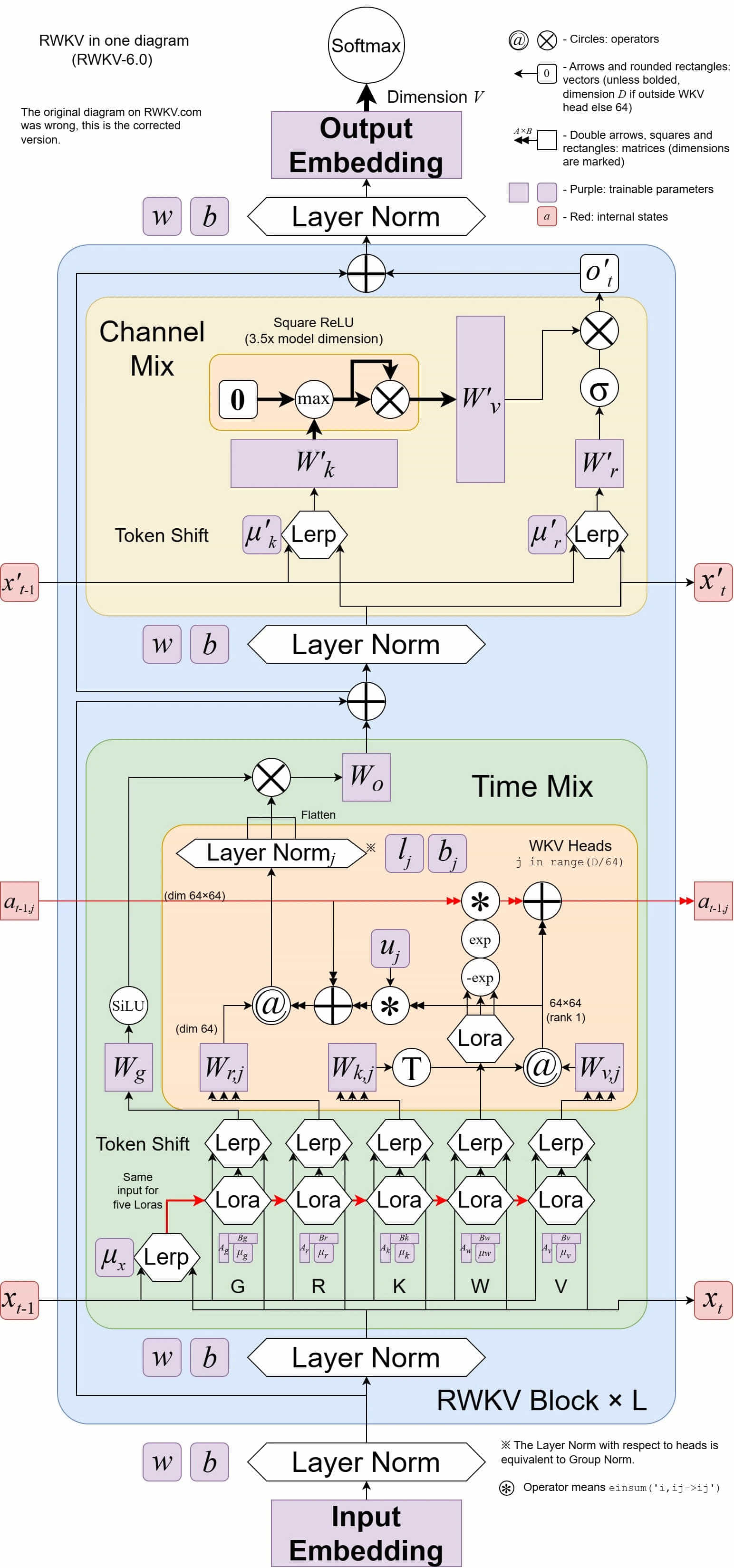

RWKV-7 illustrated

RWKV-6 illustrated