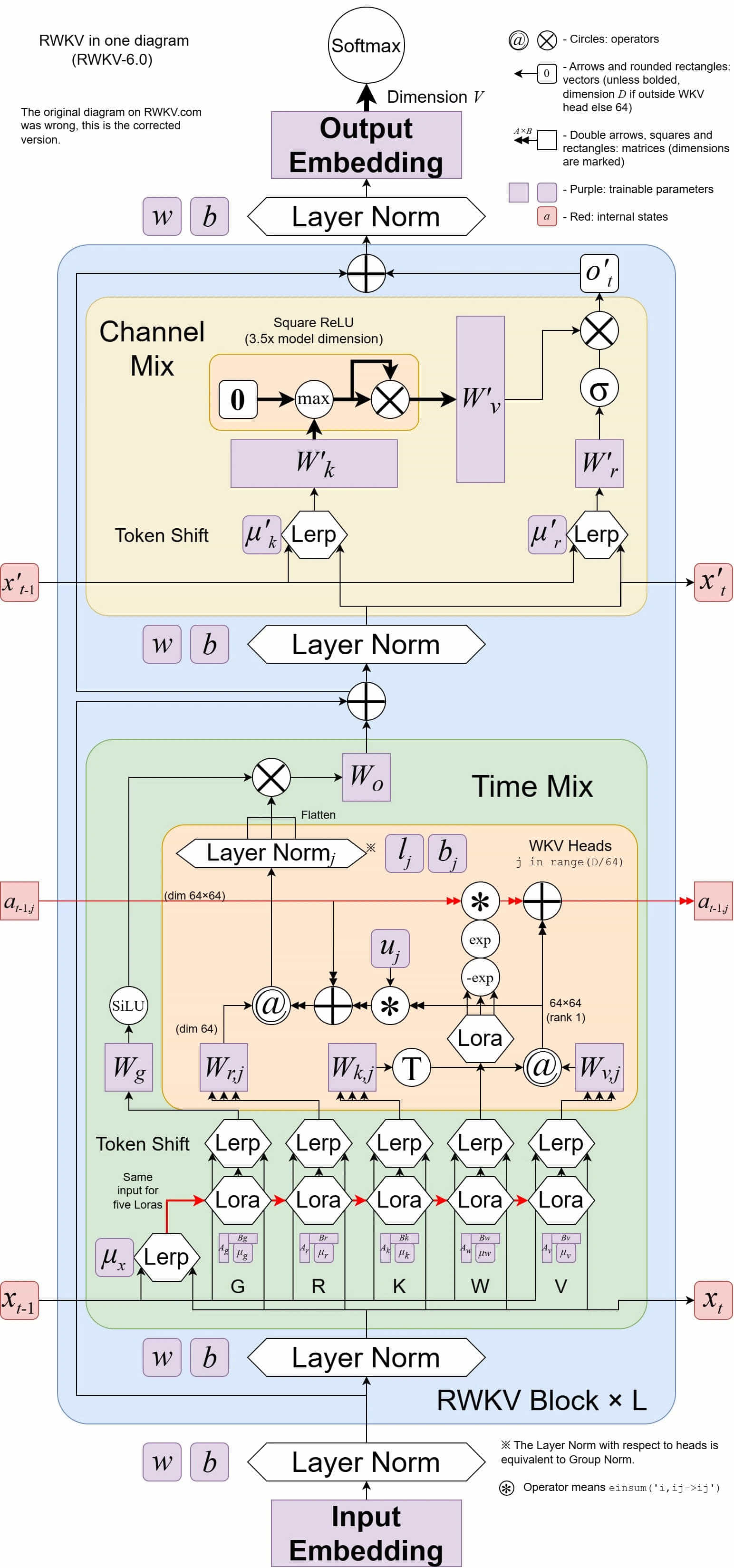

RWKV (pronounced RwaKuv) is an RNN with GPT-level LLM performance that can also be trained directly like a GPT transformer (parallelizable). The current version is RWKV v6.

It combines the best of RNNs and transformers: great performance, fast inference, fast training, low VRAM usage, "infinite" context length, and free text embeddings. Moreover, it is 100% attention-free and is an LF AI & Data Foundation project.
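To illustrate how a single model can run both as an RNN and as a parallelizable, transformer-style layer, here is a minimal sketch of the WKV operator in its RWKV-4 form for a single channel. This is an assumption-laden simplification: RWKV v5/v6 use data-dependent decay and a matrix-valued state, and real kernels add numerical stabilization. `w` (per-channel decay) and `u` (bonus for the current token) follow the RWKV-4 formulation; the function names are hypothetical.

```python
import math


def wkv_parallel(k, v, w, u):
    """Training-style form: each output position depends only on the inputs,
    never on earlier outputs, so all positions could be computed at once.
    (This naive version is O(T^2) and loops in Python for clarity.)"""
    out = []
    for t in range(len(k)):
        # Past tokens i < t, decayed by exp(-w) per step of distance.
        num = sum(math.exp(-(t - 1 - i) * w + k[i]) * v[i] for i in range(t))
        den = sum(math.exp(-(t - 1 - i) * w + k[i]) for i in range(t))
        # The current token gets the bonus u instead of a decay.
        num += math.exp(u + k[t]) * v[t]
        den += math.exp(u + k[t])
        out.append(num / den)
    return out


def wkv_recurrent(k, v, w, u):
    """Inference-style (RNN) form: a fixed-size state (a, b) is carried
    forward, so memory does not grow with sequence length."""
    a, b = 0.0, 0.0  # decayed running numerator and denominator
    out = []
    for kt, vt in zip(k, v):
        out.append((a + math.exp(u + kt) * vt) / (b + math.exp(u + kt)))
        # Fold the current token into the state for the next step.
        a = math.exp(-w) * a + math.exp(kt) * vt
        b = math.exp(-w) * b + math.exp(kt)
    return out


if __name__ == "__main__":
    k = [0.1, -0.3, 0.5, 0.2]
    v = [1.0, 2.0, -1.0, 0.5]
    par = wkv_parallel(k, v, w=0.5, u=0.3)
    rec = wkv_recurrent(k, v, w=0.5, u=0.3)
    # Both forms produce the same outputs (up to floating-point rounding).
    assert all(abs(p - r) < 1e-9 for p, r in zip(par, rec))
```

The two functions compute the same values; the parallel form suits training on GPUs, while the recurrent form keeps only two numbers per channel between tokens, which is where the low VRAM use and unbounded context length come from.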

Projects

| Name | Description |
| --- | --- |
| RWKV-LM | Training RWKV |
| RWKV-Runner | RWKV GUI with one-click install and API |
| RWKV pip package | Official RWKV pip package |
| RWKV-PEFT | Fine-tuning RWKV (a 7B model can be fine-tuned with 9 GB of VRAM) |
| RWKV-server | Fast WebGPU inference (NVIDIA/AMD/Intel), nf4/int8/fp16 |
| RWKV.cpp | Fast CPU/cuBLAS/CLBlast inference, int4/int8/fp16/fp32 |

Misc

| Name | Description |
| --- | --- |
| RWKV raw weights | All the latest RWKV weights |
| RWKV weights | Hugging Face-compatible RWKV weights |
| RWKV wiki | Community wiki (with guides and FAQ) |